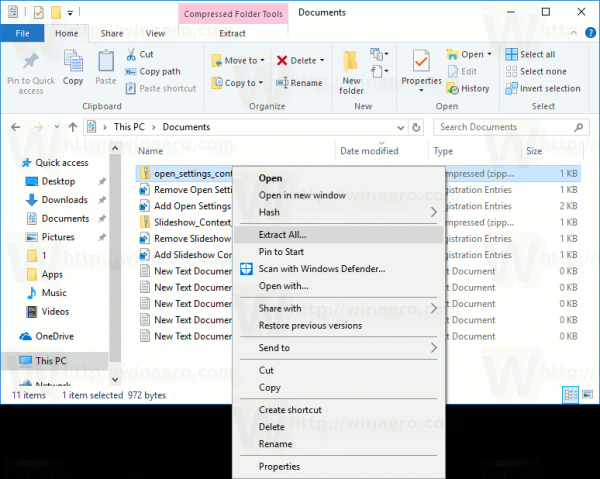

- EXTRACT ALL LINKS FROM A WEB PAGE INSTALL

- EXTRACT ALL LINKS FROM A WEB PAGE FULL

- EXTRACT ALL LINKS FROM A WEB PAGE ZIP

The `find_all` function returns every tag in the parsed page that matches a given name or filter, for example every `a` (anchor) tag. The `BeautifulSoup` constructor parses the raw HTML of the webpage into a tree that can be searched.

The required packages are imported and aliased. The URL is then opened, and data is read from it.

The page text is parsed with `soup = BeautifulSoup(req.text, "html.parser")`. The output starts with the line "The href links are :", and each link is then printed with `print(link.get('href'))`.
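A minimal sketch of how `BeautifulSoup` and `find_all` fit together, assuming the `beautifulsoup4` package is installed. The function name `extract_links` and the sample HTML are illustrative assumptions, not part of the original example.

```python
from bs4 import BeautifulSoup


def extract_links(html):
    """Return the href value of every <a> tag that has one."""
    soup = BeautifulSoup(html, "html.parser")
    # href=True skips anchors that carry no href attribute
    return [link.get("href") for link in soup.find_all("a", href=True)]


# Hypothetical sample page used instead of a live request
sample_html = """
<html><body>
  <a href="/docs">Docs</a>
  <a name="top">No href here</a>
  <a href="https://example.com/page">Example</a>
</body></html>
"""

print("The href links are :")
for href in extract_links(sample_html):
    print(href)
```

For a live page, the HTML string could come from the `req.text` value of a `requests.get(...)` response instead of the sample string.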

EXTRACT ALL LINKS FROM A WEB PAGE INSTALL

The line below can be run to install BeautifulSoup on Windows: `pip install beautifulsoup4`. The example that follows starts with `from bs4 import BeautifulSoup`. Web scraping can also be used to extract data for research purposes, understand or compare market trends, perform SEO monitoring, and so on.

EXTRACT ALL LINKS FROM A WEB PAGE FULL

Extracting all links from a website is useful in several situations. It helps when you want to generate a full sitemap manually. It lets you check whether the links on a site are broken or working. It helps in web scraping, which is the process of extracting, using, and manipulating data from different resources. It also lets you count the external and internal links on your webpage.

BeautifulSoup is a third-party Python library that is used to parse data from web pages. Python's BeautifulSoup hasn't been shown yet in this thread: the script starts with `import requests` and passes the result of `requests.get("")` to `BeautifulSoup`.

NodeJS has cheerio: `const axios = require("axios")` followed by `const $ = cheerio.load((await axios.get("")).data)`.

Ruby has the nokogiri gem, used from a script that starts with `#! /usr/bin/env ruby`.

In PowerShell, you use Invoke-WebRequest to download files from the web via HTTP and HTTPS. However, the cmdlet enables you to do much more than just download files: you can use it to analyze the contents of web pages and use the information in your scripts.

Perl can fetch and parse the page with `$parser->parse(get($url) or die "Failed to GET $url")`. Sample usage, including writing to a file, is the same for any script here with a shebang.

With an online extractor, you can copy and paste any HTML document into the text area and hit the Extract URLs button to get a list of all unique links on the HTML page.

Related automation steps include "Go to web page", which navigates to the home page of Bing search, and "Read from Excel worksheet" (value of a single cell).
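Counting internal and external links, as described above, could be sketched with the Python standard library alone. The names `LinkCollector` and `classify_links`, and the rule that a link is "internal" when its host matches the base URL's host, are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def classify_links(html, base_url):
    """Split the page's http(s) links into (internal, external) lists."""
    collector = LinkCollector()
    collector.feed(html)
    base_host = urlparse(base_url).netloc
    internal, external = [], []
    for href in collector.hrefs:
        # Resolve relative links such as "/about" against the page URL
        absolute = urljoin(base_url, href)
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, and similar schemes
        if parsed.netloc == base_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external
```

The resolved absolute URLs could then be requested one by one to see which are broken, or written out as sitemap entries.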

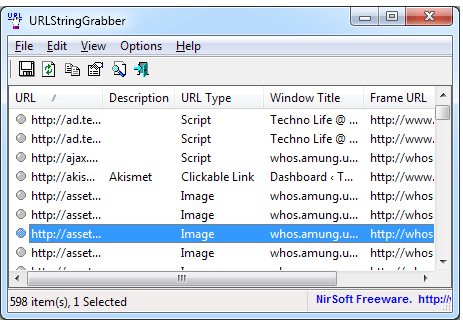

The selection method would not work well when the source page is hundreds of pages long. When the links are highlighted, press and hold Control while letting go of the right mouse button. Tip: Click the 'Lock' button to keep the current list of links even if you browse to other pages. Example: Type 'mp3' in the 'Quick find' field to find all mp3 files on the page.

EXTRACT ALL LINKS FROM A WEB PAGE ZIP

As discussed in other answers, Lynx is a great option, but there are many others in nearly every programming language and environment.

Another choice is xmllint, with the page piped into it: `| xmllint -html -xpath 2>/dev/null - \`.

Additionally, Perl offers HTML::Parser. The script starts with `#!/usr/bin/perl`, reads the URL with `my $url = shift or die "No argument URL provided"`, and creates the parser with `my $parser = HTML::Parser->new(api_version => 3, start_h => )`.

In MATLAB, that could be done using webread to retrieve data from the webpage and regexp to extract all the hyperlinks in the page by parsing through the retrieved data.

To select links with the mouse, hold down the right mouse button and drag a selection around the links. The links panel extracts all links in the current webpage so you can easily find images, music, video clips, zip files and other downloads, as well as links to other pages.
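The retrieve-then-regexp approach mentioned above could look roughly like this in Python. This is a sketch, not a robust parser: the pattern only handles quoted `href` attributes, and the helper names `extract_hrefs_regex` and `links_with_extension` are assumptions made for illustration.

```python
import re

# Matches href="..." or href='...' and captures the quoted value
HREF_PATTERN = re.compile(r'href\s*=\s*["\']([^"\']+)["\']', re.IGNORECASE)


def extract_hrefs_regex(html):
    """Return the unique href values in the order they first appear."""
    matches = HREF_PATTERN.findall(html)
    # dict.fromkeys removes duplicates while keeping insertion order
    return list(dict.fromkeys(matches))


def links_with_extension(links, extension):
    """Keep only links ending in the given extension, e.g. '.zip'."""
    return [link for link in links if link.lower().endswith(extension.lower())]
```

A regular expression is quick for simple pages, but an HTML parser copes better with unquoted attributes, comments, and other markup a pattern can miss.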
